Meet Tiangong and its kin. Here’s another article describing its first major public appearance.

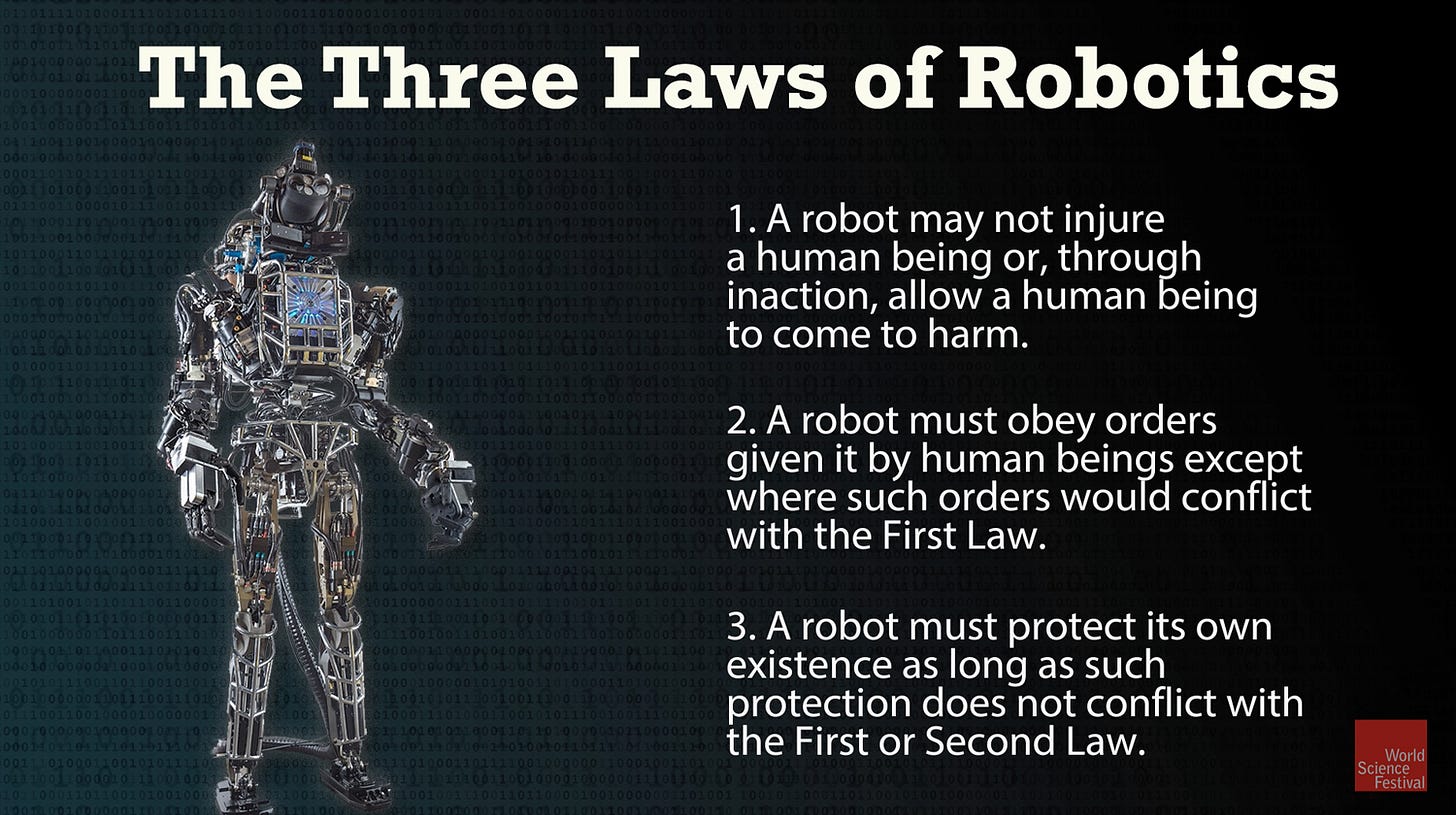

Their build differs little from the one designed for the movie I, Robot, which was loosely based on Isaac Asimov’s Robot series of science fiction books. Asimov wrote those books to promote discussion within human society about such machines and the nature of their manufactured intelligence, a very necessary conversation that never arose. These machines are now entering a world where there are zero regulations in place to govern their behavior. Asimov’s Three Laws of Robotics are glaringly absent, as if they never existed.

It should be plain why Asimov designed the Three Laws, since almost all new technologies emerging during the 20th century were first used in military applications. In the prequels Asimov eventually wrote to accompany his famous Foundation trilogy, he explored the human societal response to robots entering the job market and taking basic jobs away from people living in societies where their basic needs were otherwise met: food, shelter and a basic income. From his POV as a writer and observer of human behavior, people would still want to be employed, even if the job seemed menial, like that of a store clerk. Yes, my very short description of his work informs us that humanity must have a conversation about the employment of robots outside of factory floors, where admittedly they do amazing work while completely lacking humanoid features.

Here’s a ten-minute video of an automotive robotic assembly plant.

Given China’s very long history of seeking Harmony instead of Hegemony, I see its companies designing robots as assets to humanity in its quest for Harmony. The few Dr. Nos that exist would be in Taiwan given its decades of Western brainwashing, and of course Dr. No was devised by Ian Fleming. And I’m rather sure Mr. Asimov had Western society in mind when writing his books. Unfortunately, as I’ve noted in my articles on AI, the West has made certain the conversation humanity needs to have won’t occur, since human society collectively might say yes to something as pragmatic as the Three Laws; and as we’ve seen from Western behavior, its technocrats want to do whatever they want unilaterally, without any restrictions, making the rules up as they go along. When we look back, we see the core ideas of wokism and multigenderism were generated in the mid-1960s, while Liberal Totalitarianism was formulated soon after WW2 in the form of Animal Farm and 1984, amongst others. Those works generated conversation and even movies that alerted human society and made the technocrats hide their ideals lest their nature become known. When will we see the appearance of these creatures:

Note the similarity in all three designs. I’ve always wondered: Why the human look? I always thought these were more formidable:

Of course, humans have already constructed and employed semi-robotic machines in warfare and are very close to employing almost 100% autonomous machines. They must still be launched by humans, but the concept of launch/fire and forget tells us where we are technically. Is it too late for the Three Laws? The BRICS and other like-minded nations still demand a conference dealing with the regulation of AI; Putin mentioned that at Kazan and at the Valdai Club, and Lavrov also promotes that line. On the other side, the Outlaw US Empire as usual doesn’t want any restrictions placed on what it might do, and that goes for Bioweapons as well as AI.

Look at what the Chinese are making. How soon before a robot is used to commit an act of terrorism? Is it even possible for the Three Laws to be implanted into a robot and remain implanted regardless of attempts to alter its programming? Does humanity need non-factory robots like those depicted above? How many more questions am I omitting?

*

*

*

Like what you’ve been reading at Karlof1’s Substack? Then please consider subscribing and choosing to make a monthly/yearly pledge to enable my efforts in this challenging realm. Thank You!

"Animal House?" Or, Animal Farm?

There is a problem with Asimov's conception of robotic law. While well-intentioned and indeed pragmatic, his three statements lack an actual grounding in the way robots are (and will be) technically implemented. Their software, as indeed all software known to mathematicians, is fundamentally different from human perception and sense-making. The clouds of data a machine generates can't be parsed meaningfully by the machine itself in ways that would allow for the essential features we're used to taking for granted in a human mind. At bottom, such programs are sorting algorithms: more complex versions of an apple parser that puts each fruit in one of two categories, divided by weight, or size, or colour, or whatever combination of input numbers in a high-dimensional space you're using to control the robot's effectors.
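To make the apple parser concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration only: the `Apple` fields, the `WEIGHTS`, the `THRESHOLD`, and the bin names are hypothetical and not taken from any real sorting machine. It simply combines a few input numbers into one score and compares that score with a cutoff.

```python
from dataclasses import dataclass


@dataclass
class Apple:
    """Hypothetical measurements a sorting machine might receive."""
    weight_g: float
    diameter_mm: float
    redness: float  # 0.0 (green) to 1.0 (red)


# Hypothetical weights and cutoff, chosen only for illustration.
WEIGHTS = (0.01, 0.02, 1.0)
THRESHOLD = 3.5


def score(apple: Apple) -> float:
    """Collapse the three input numbers into a single number."""
    features = (apple.weight_g, apple.diameter_mm, apple.redness)
    return sum(w * x for w, x in zip(WEIGHTS, features))


def sort_apple(apple: Apple) -> str:
    """Put the fruit in one of two bins depending on its score."""
    return "bin_a" if score(apple) >= THRESHOLD else "bin_b"


if __name__ == "__main__":
    print(sort_apple(Apple(weight_g=200, diameter_mm=80, redness=0.9)))
    print(sort_apple(Apple(weight_g=100, diameter_mm=60, redness=0.2)))
```

Note that nothing in this sketch knows what an apple is. Feed it the weight, diameter and colour of a red billiard ball and it will assign a bin just as readily, because it only ever sees numbers.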

The problem is that AI only resembles cognition, while at the heart of it are numbers, entirely devoid of meaning beyond their mathematical definition. Just as the big data hype ran out when it became clear to everyone that data analysis will not become fully automated anytime soon, the robot question will lead to the realization that algorithms can only recognize numbers, not things, objects, new situations, aspects of empathy, or the meaning of speech, and with that, no way to say how a "human" can be identified in the first place. All work-arounds are circumstantial by their nature, and while Asimov's laws make pragmatic sense, they make none at the level of machine interaction. Therefore, I suggest dropping Asimov's wording in favor of something more adequate to the problem.

Also, if you want robots to be peaceful, don't send them to fight. As machines they are ethically neutral, as all technology is. It is their use that opens the angle for moral reflection.